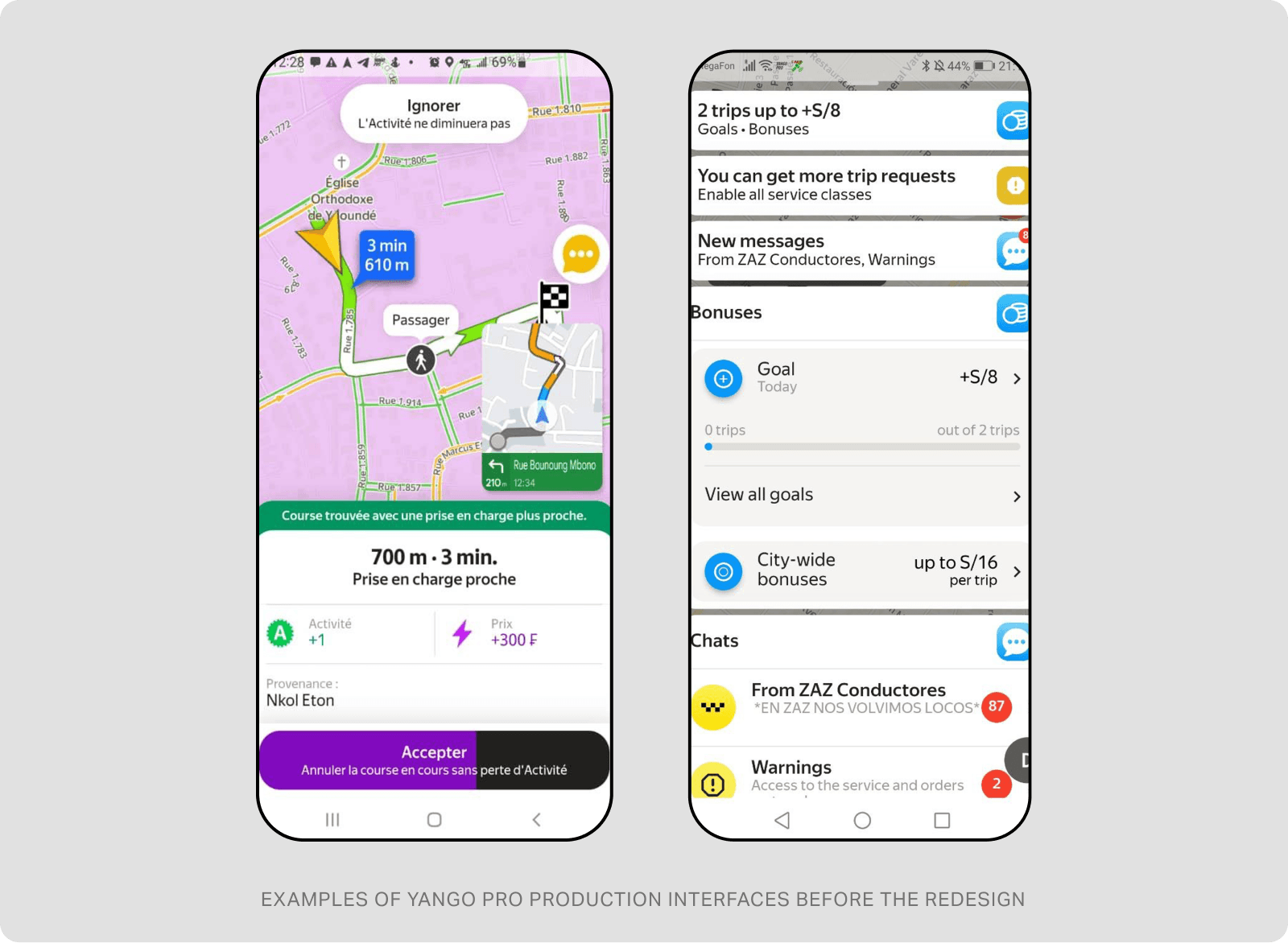

But as we entered new markets, especially in regions like Africa, we quickly realized that our app wasn’t exactly a one-size-fits-all solution. In some markets, drivers were using smartphones for the first time, and many weren’t familiar with the kind of tech-heavy interfaces we’d built. In others, like Latin America, the competition was fierce: drivers had a dozen apps to choose from, and they weren’t interested in spending time figuring out a complicated app like ours.

We faced a tough decision: either invest in long-term user education (which felt like a black hole of time and resources) or simplify the app to meet the needs of these new markets. For us, the choice was clear. The redesign was code-named Yango Pro Lite.

Spoiler alert: simplifying the app was the right call, but it wasn’t as easy as we thought. Along the way, we made some mistakes. Big ones. And that’s what this article is about.

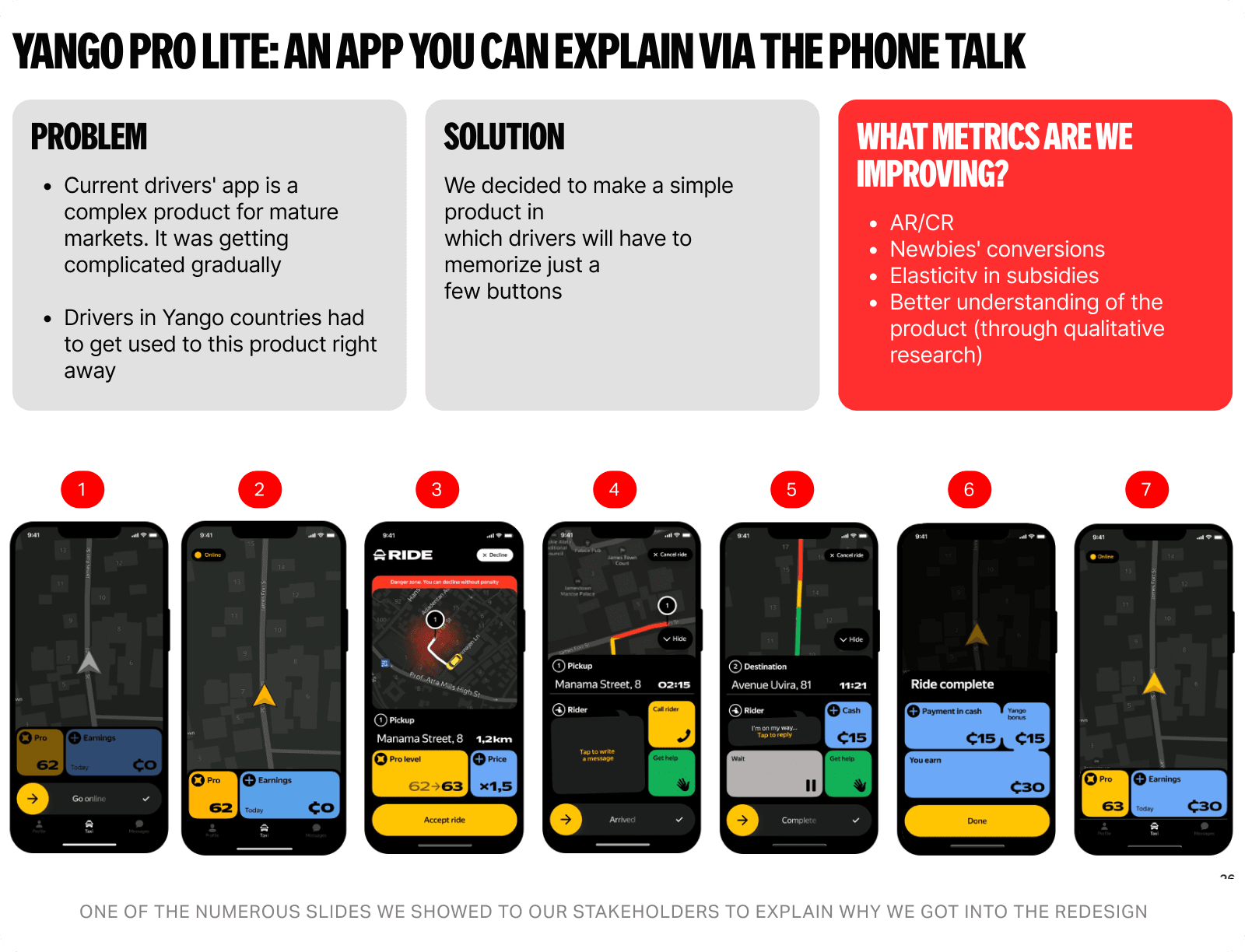

Mistake #1: We Didn’t Explain the "Why" to Our Stakeholders

Here’s the thing: as a product team, we lived this problem. We spent hours talking to drivers, watching them struggle with features, and seeing how our app just didn’t click for them. The solution felt obvious to us: simplify, simplify, simplify. So, we jumped right into it.

But here’s where we messed up: we didn’t bring everyone else along for the ride. Our colleagues in business, marketing, and analytics were caught off guard. To them, it looked like we were throwing away years of hard work to rebuild something that, from their perspective, was already working just fine. This led to tension in prioritization meetings and in decisions about resource allocation.

How We Fixed It (and What We Learned):

We realized that not everyone sees the problem the same way. If you don’t explain the "why" behind your decisions, people will naturally push back. So, we hit pause and did the work to get everyone on the same page. We met with stakeholders one-on-one, put together a clear presentation with product metrics and clips from driver interviews, and spent a lot of time listening to their concerns.

The lesson? Context is everything. Don’t assume everyone sees what you see. Take the time to explain the problem, share the data, and listen.

Mistake #2: We Changed Too Much at Once (and Paid the Price)

Let’s be real: we knew how AB testing works. One change at a time, measure the impact, rinse, repeat. That’s how we usually roll when we’re launching a single feature or tweaking something small. But this wasn’t a small project. It was a full-blown redesign, and we were launching in multiple new markets every month. Time wasn’t exactly on our side.

So, we did what felt like the only option: we rolled out changes in huge chunks. Sometimes, we’d change three or four major features at once. And, surprise, surprise, not all of those changes were winners. Metrics like driver churn and trip volume started to move in the wrong direction, and we had no idea which change was causing the problem. It was like trying to find a needle in a haystack.

How We Fixed It (and What We Learned):

We had to find a middle ground. Instead of changing everything at once, we started grouping changes into logical parts. For example, we’d test just the main screen redesign or just the order flow updates. It wasn’t perfect from a pure AB testing theory standpoint, but it worked for us. This approach helped us pinpoint problems faster and fix them without derailing the entire project.

The lesson? Even when you’re under pressure, don’t throw testing best practices out the window. Find a way to break changes into manageable chunks. It’ll save you a lot of headaches (and bad metrics) down the road.
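For readers who like to see the idea in code, here is a minimal sketch of what testing changes in logical groups could look like. It is not our production setup: the group names, the `assign_variant` helper, and the 50/50 split are assumptions made up for illustration. Each group gets its own independent split of drivers, so a metric drop can be traced to one group instead of the whole redesign.

```python
import hashlib
from typing import Dict

# Hypothetical change groups, named after the two examples in the article.
CHANGE_GROUPS = ["main_screen_redesign", "order_flow_updates"]


def assign_variant(driver_id: str, change_group: str, treatment_share: float = 0.5) -> str:
    """Deterministically place a driver in 'control' or 'treatment' for one change group."""
    # Salting the hash with the group name gives every group its own split,
    # so being in treatment for one group says nothing about another group.
    digest = hashlib.sha256(f"{change_group}:{driver_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # roughly uniform value in [0, 1)
    return "treatment" if bucket < treatment_share else "control"


def assignments_for(driver_id: str) -> Dict[str, str]:
    """Return the variant this driver sees for every change group."""
    return {group: assign_variant(driver_id, group) for group in CHANGE_GROUPS}
```

Calling `assignments_for("driver-42")` returns one variant per group, and the same driver always lands in the same variant, which keeps the experience stable for the length of the test.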

Mistake #3: We Got Stuck in AB Test Limbo

Here’s a scenario we didn’t see coming: some of our tests didn’t move the needle. At all. Some of the metrics we were hoping to improve stayed exactly the same. No improvement, no deterioration. Just… flat.

This left us in a weird spot. If the changes weren’t making things better, why roll them out? We started second-guessing ourselves, delaying decisions, and letting tests run for months, hoping for some magical new insight to appear. In the process, we lost sight of the bigger picture: our main goal wasn’t to improve one specific metric. It was to simplify the app and create a foundation for future launches in new markets.

How We Fixed It (and What We Learned):

We had to make some tough calls. Even when a change didn’t show immediate metric improvements, we rolled it out if it aligned with our core goal of simplification. The hardest part? Explaining this to stakeholders. Why spend resources on something that doesn’t move the numbers? It took a lot of persuasion and reminding everyone of the "why" behind the redesign (see Mistake #1 for how important that is).

The lesson? Not every decision will show immediate results, and that’s okay. Sometimes, you have to trust the process and focus on the long-term vision, even if it means rolling out changes that don’t “win” in the short term.
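One way to get out of limbo is to agree on a decision rule before the test starts. The sketch below is an illustration, not the rule we actually used: it assumes a simple conversion-style metric, a normal-approximation confidence interval, and a hypothetical tolerance (`margin`) for how much harm would still be acceptable for a change that supports the simplification goal.

```python
import math


def diff_confidence_interval(success_c, n_c, success_t, n_t, z=1.96):
    """Approximate 95% interval for (treatment rate - control rate)."""
    p_c = success_c / n_c
    p_t = success_t / n_t
    # Standard error of the difference between two independent proportions.
    se = math.sqrt(p_c * (1 - p_c) / n_c + p_t * (1 - p_t) / n_t)
    diff = p_t - p_c
    return diff - z * se, diff + z * se


def rollout_decision(success_c, n_c, success_t, n_t, margin=0.02):
    """Return 'ship_improved', 'ship_flat', or 'hold' under the assumed rule."""
    low, _high = diff_confidence_interval(success_c, n_c, success_t, n_t)
    if low > 0:
        return "ship_improved"  # the metric clearly went up
    if low > -margin:
        return "ship_flat"  # any harm is within the agreed tolerance
    return "hold"  # a drop larger than the tolerance cannot be ruled out
```

With a rule like this, a flat result stops being a reason to keep the test running for months: if the worst plausible outcome is within the tolerance, the change ships on the strength of the long-term goal.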

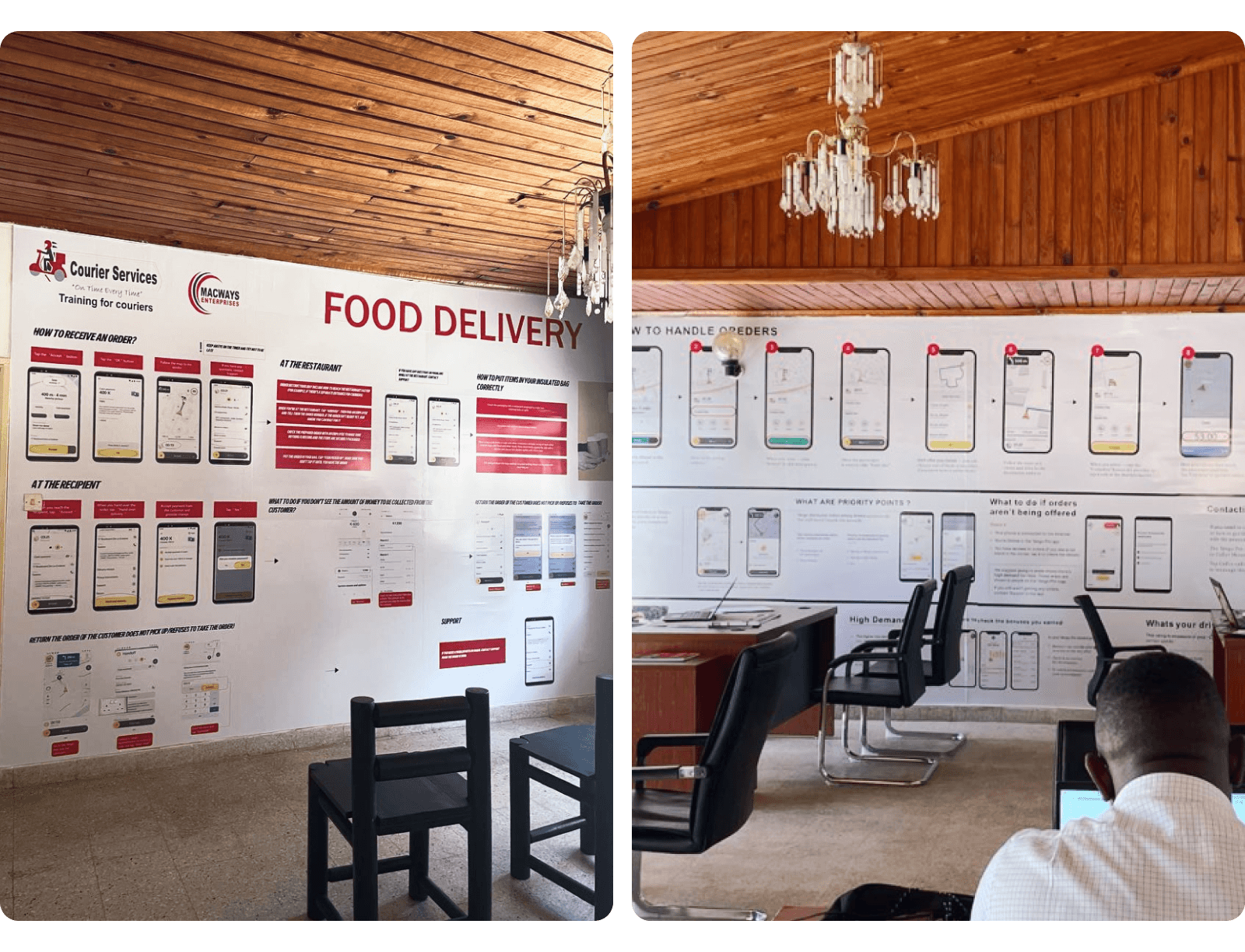

Mistake #4: We Made Life Harder for Other Teams

Here’s something we didn’t fully consider: long AB tests don’t just impact the product team. They create a ripple effect. While we were busy testing multiple versions of the app, our colleagues in support and marketing were drowning in extra work. They had to create different versions of marketing materials, support scripts, and ads for each variation of the product. Essentially, their workload doubled.

How We Fixed It (and What We Learned):

We realized we had to hit the brakes. We asked our teams to stop duplicating efforts where possible and wait for us to finalize the changes. This meant making some tough calls, like delaying a marketing campaign. It wasn’t ideal, but it gave everyone a chance to pause, regroup, and focus on what really mattered.

The lesson? AB tests don’t exist in a vacuum. When you’re running multiple variations, think about how they impact other teams. Sometimes, slowing down and simplifying processes for everyone is better than pushing forward at full speed.

Disclaimer: This article was written by a Yangoer as part of our internal content initiative to share real work stories and insights. The views expressed are personal and intended for informational purposes only. They do not necessarily represent the views of Yango or its affiliates. Any mentions of tools, features, or processes reflect a specific point in time.